Adaptive Multi-Objective Optimization-Based Coverage Path Planning Method for UUVs


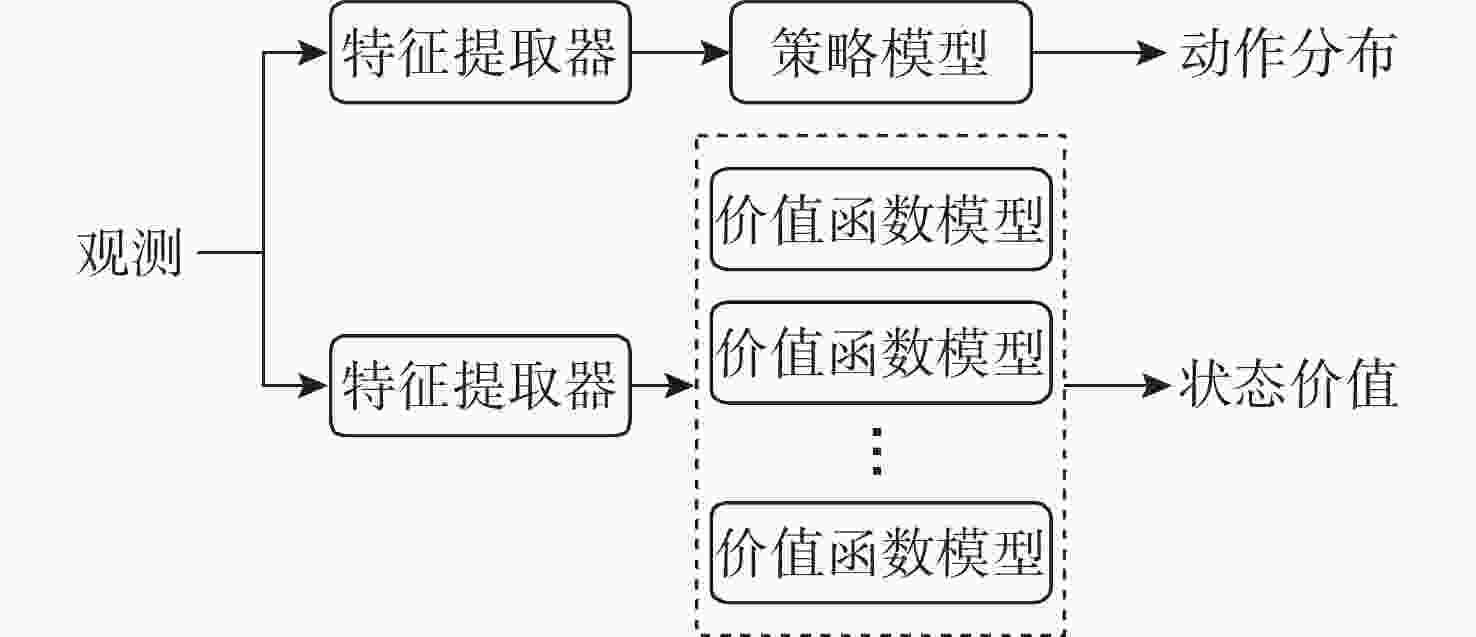

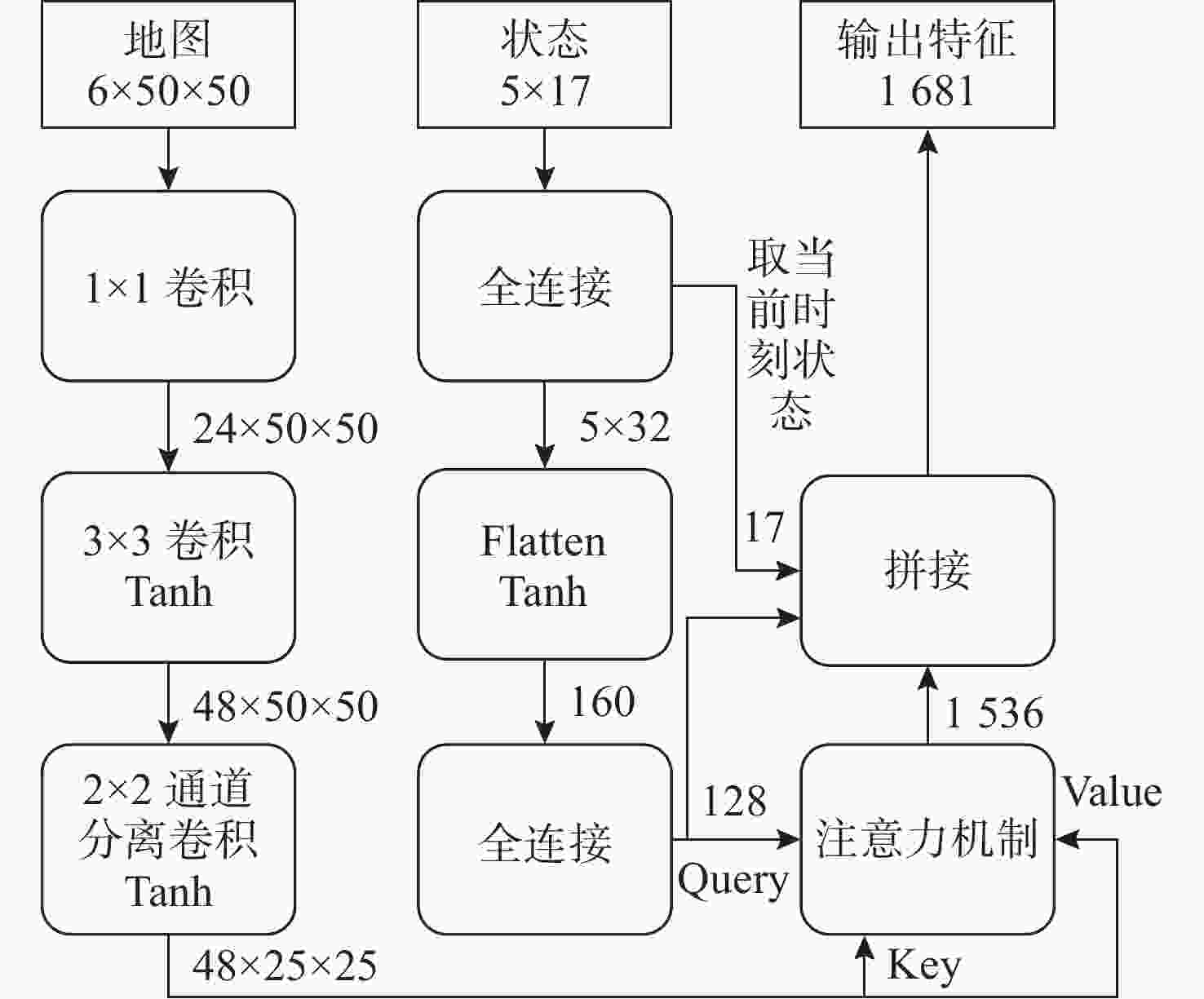

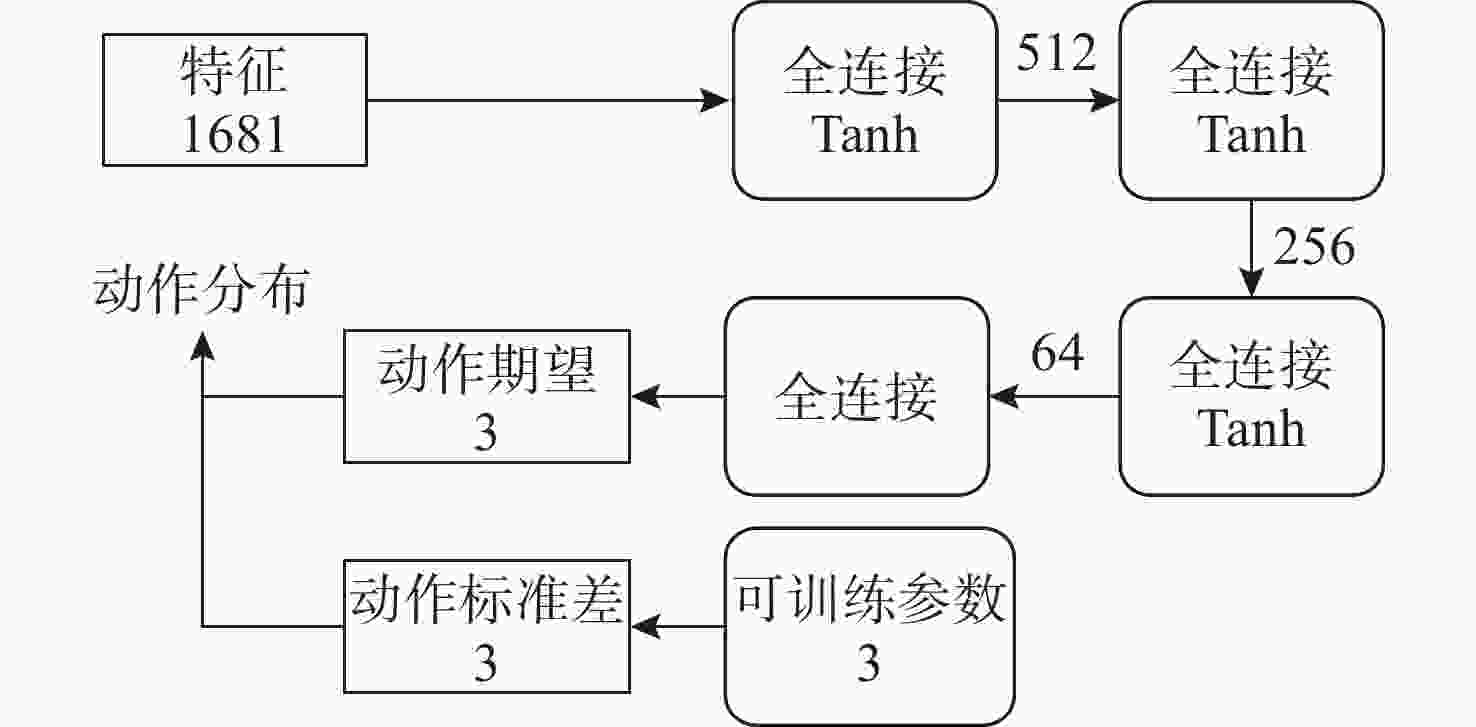

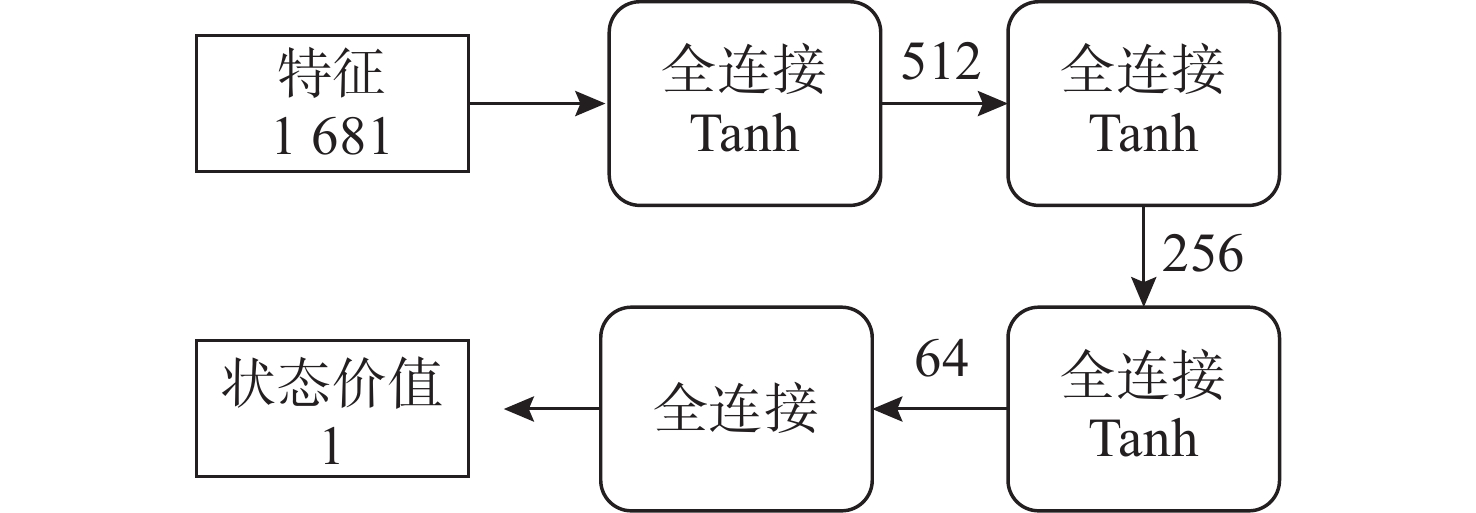

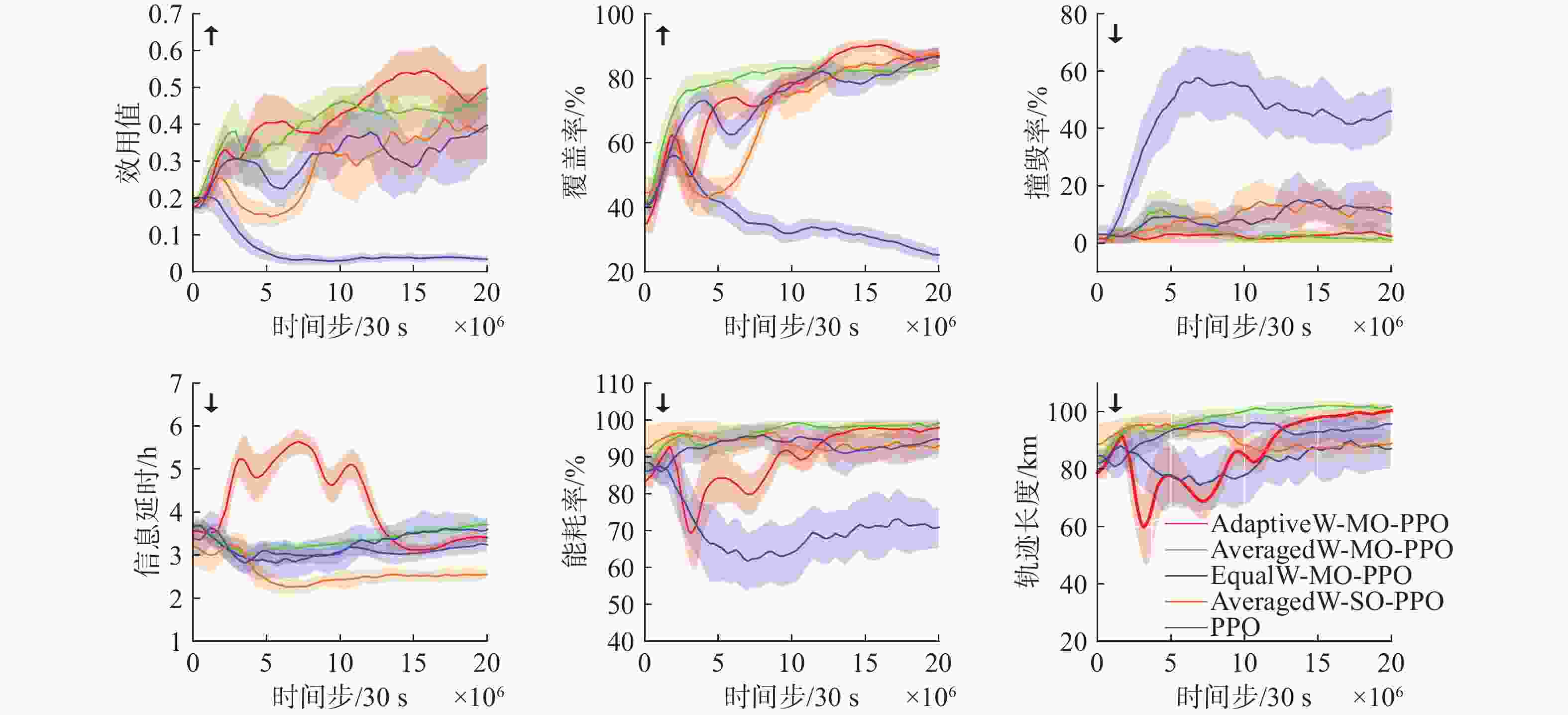

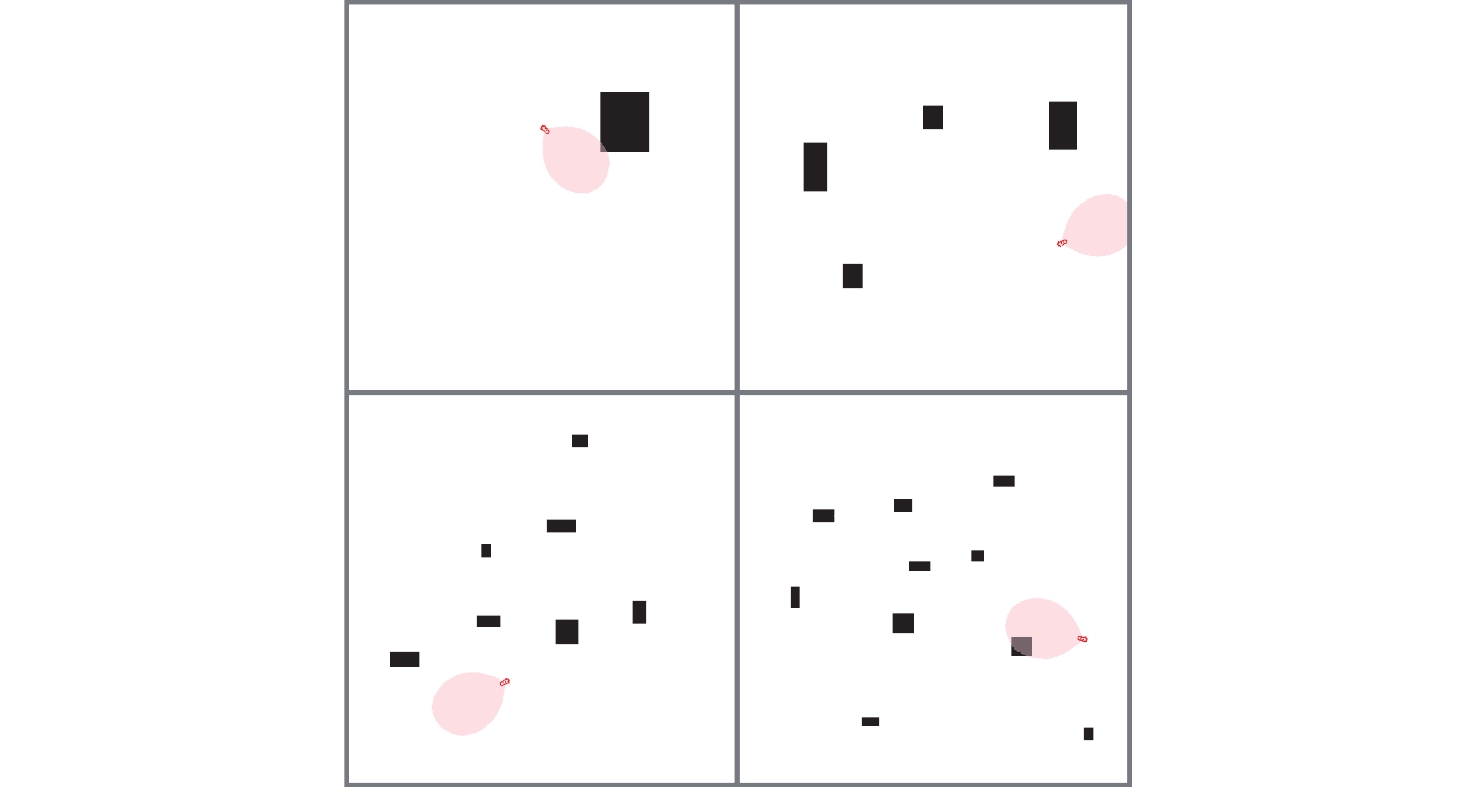

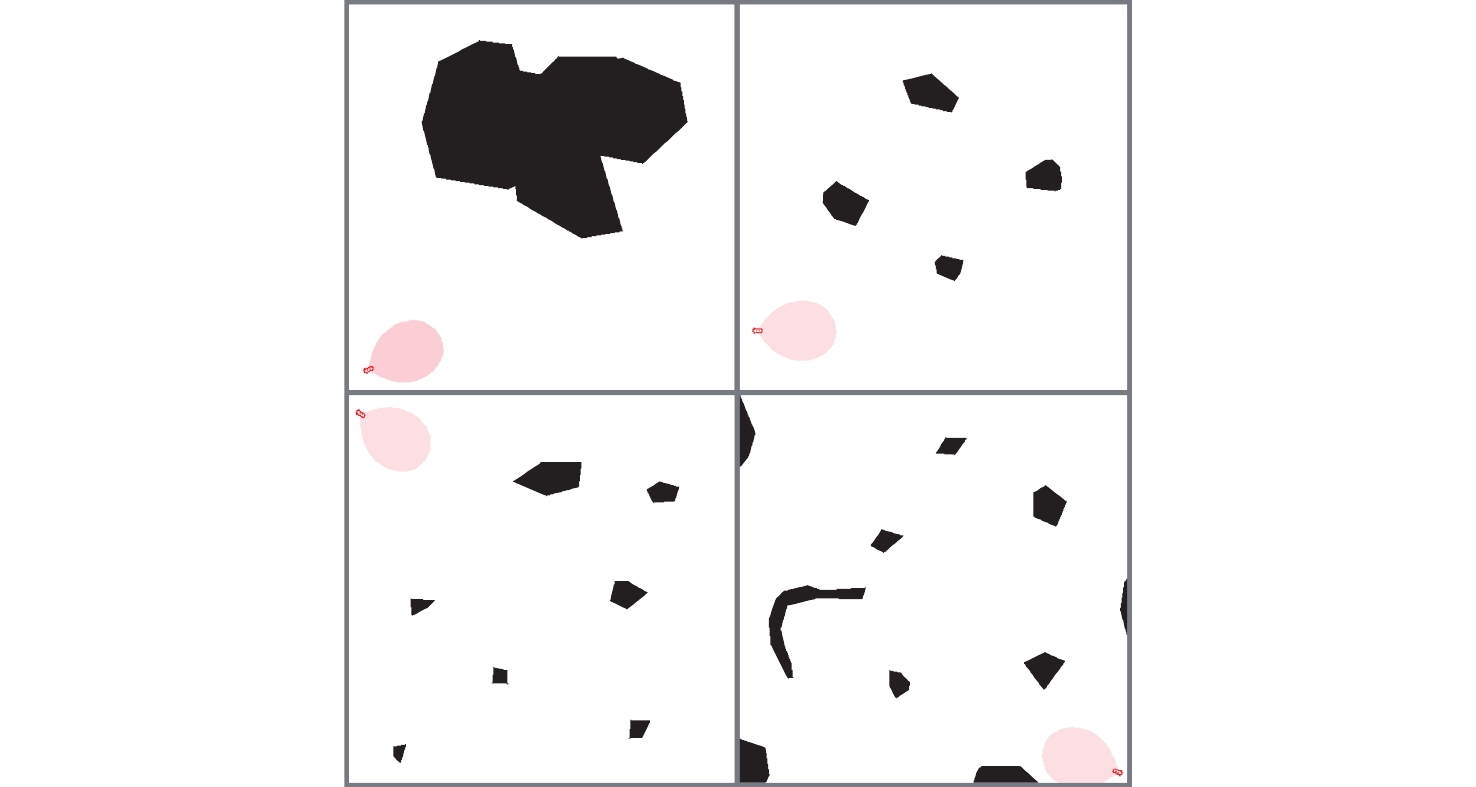

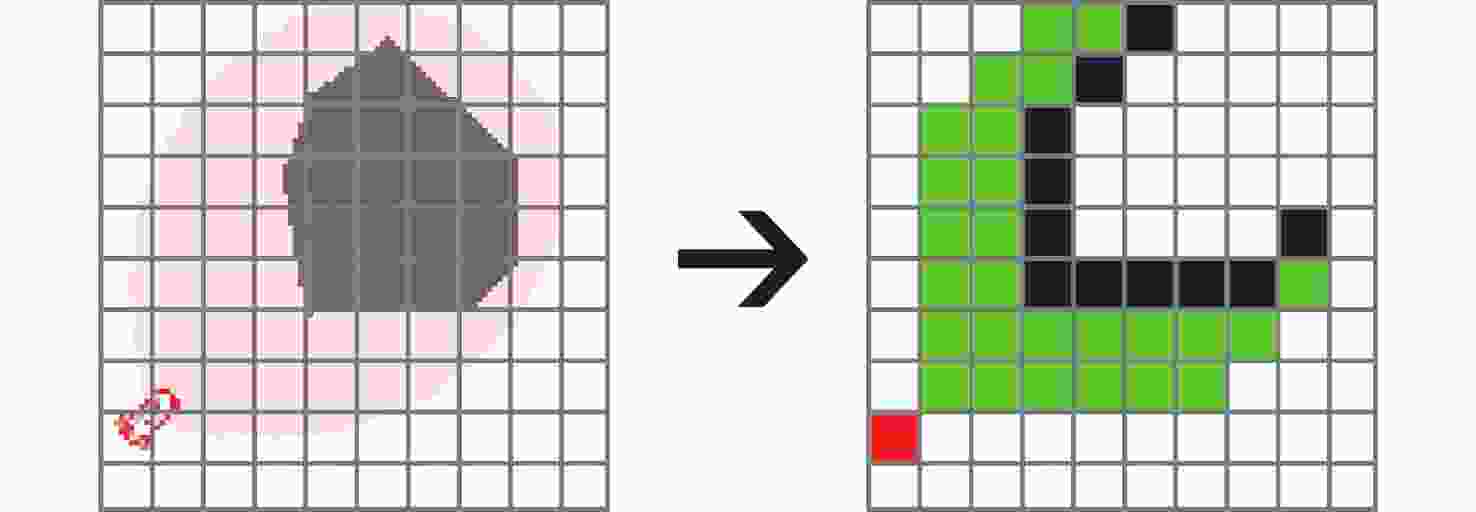

Abstract: Coverage path planning is a critical task for unmanned undersea vehicles (UUVs) operating in unknown waters. Because of environmental uncertainty, motion constraints, and limited energy, traditional path planning methods struggle to adapt to complex scenarios. This paper proposes an adaptive multi-objective optimization-based coverage path planning method for UUVs that combines proximal policy optimization (PPO) with a dynamic weight adjustment mechanism. Using correlation analysis of the reward objectives and linear regression estimation, the method adaptively adjusts the weights of the optimization objectives, so that a UUV can autonomously plan efficient coverage paths in environments with unknown obstacles and ocean currents. To validate the method, a UUV motion and sonar detection model was built in a two-dimensional simulation environment, with the UUV motion model simplified from six-degree-of-freedom rigid-body motion to planar motion, and comparative experiments were run under a range of obstacle distributions and random ocean currents. The results show that, compared with traditional methods, the proposed method raises coverage while also improving mission completion rate, trajectory length, energy consumption, and information latency. Specifically, coverage increases by 4.03%, mission completion rate by 10%, and utility by 10.96%, while mission completion time falls by 14.13%, trajectory length by 26.85%, energy consumption by 10.3%, and information latency by 19.34%. These results indicate that the method significantly improves the adaptability and robustness of UUVs in complex environments and offers a new optimization strategy for autonomous underwater exploration tasks.
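The abstract names the ingredients of the weight adjustment (correlation analysis of the reward objectives plus linear regression estimation), but this excerpt does not give the update rule. The following is a minimal sketch of one possible reading, not the authors' algorithm: the function name `estimate_objective_weights`, the use of the episode return as the regression target, and the L1 normalisation of the slopes are all illustrative assumptions.

```python
import numpy as np


def estimate_objective_weights(rewards, returns):
    """Hypothetical weight estimate from logged per-objective rewards.

    rewards: array of shape (episodes, objectives), one column per objective.
    returns: array of shape (episodes,), a scalar outcome per episode.
    Both inputs and the normalisation below are assumptions for illustration.
    """
    rewards = np.asarray(rewards, dtype=float)
    returns = np.asarray(returns, dtype=float)

    # Correlation of each objective's reward with the episode outcome.
    # (A constant column would give NaN here; real data needs a guard.)
    corr = np.array([
        np.corrcoef(rewards[:, j], returns)[0, 1]
        for j in range(rewards.shape[1])
    ])

    # Ordinary least squares with an intercept term: returns ~ rewards @ slopes + b.
    design = np.column_stack([rewards, np.ones(len(rewards))])
    coef, *_ = np.linalg.lstsq(design, returns, rcond=None)
    slopes = coef[:-1]

    # Normalise so the absolute weights sum to one (signs are preserved).
    scale = np.abs(slopes).sum()
    weights = slopes / scale if scale > 0 else np.zeros_like(slopes)
    return corr, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    demo_rewards = rng.normal(size=(200, 3))           # synthetic data only
    demo_returns = demo_rewards @ np.array([2.0, 1.0, -1.0]) + 0.5
    corr, weights = estimate_objective_weights(demo_rewards, demo_returns)
    print("correlations:", corr)
    print("weights:", weights)
```

Keeping the sign of each slope allows negative weights, which is at least compatible with the negative entries reported in Table 1; whether the paper normalises its weights this way is not stated in this excerpt.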


表 1 各目标平均权重

Table 1. Average weight of each objective

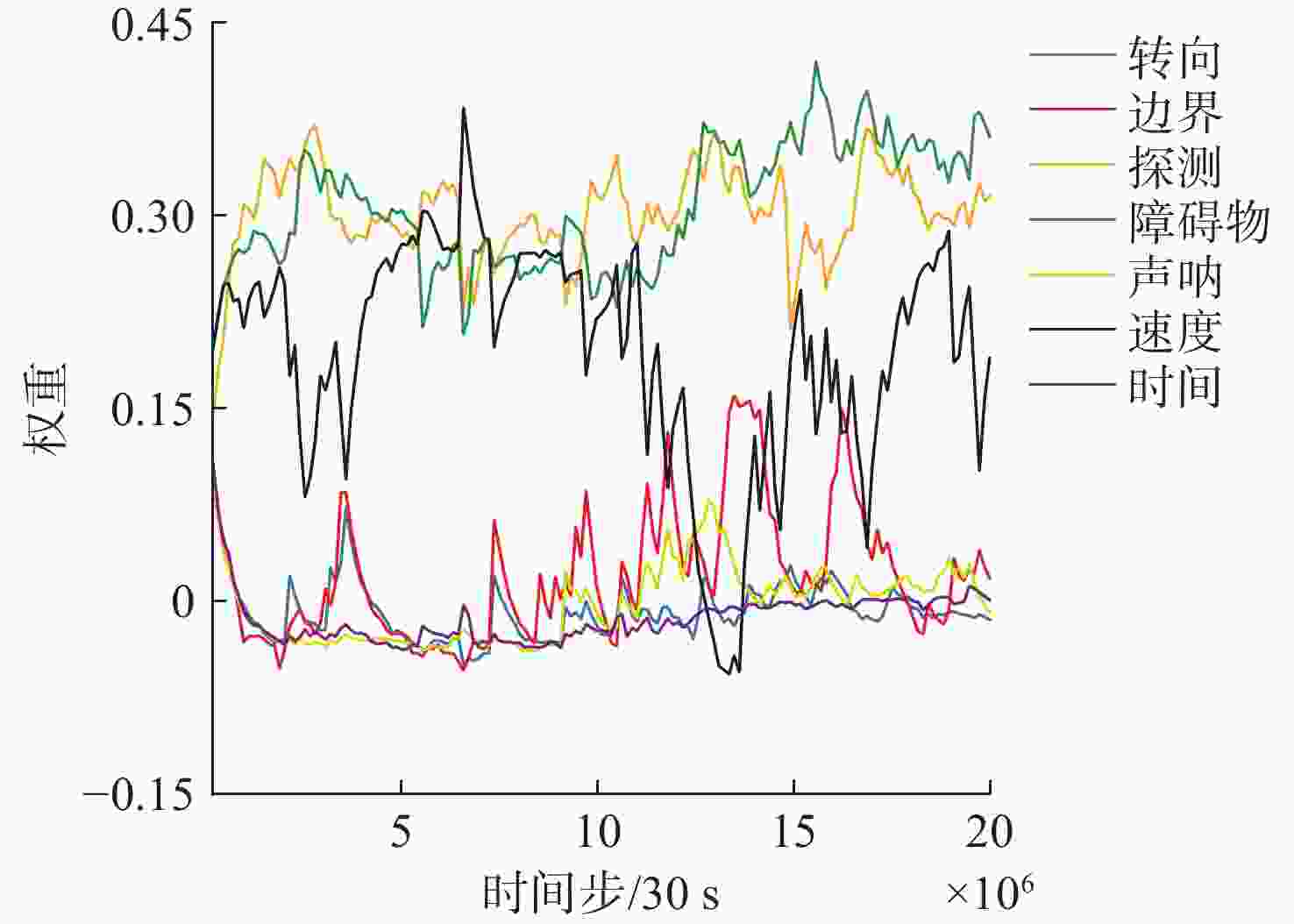

目标 平均权重 时间 −0.013 359 声呐 −0.002 343 探测 0.301 260 边界 0.020 247 障碍物 0.307 023 速度 0.194 401 转向 −0.007 376 表 2 矩形障碍物场景下测试结果
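To make the Table 1 weights concrete, the sketch below combines per-objective reward terms into a single scalar by a weighted sum. Linear scalarisation is an assumption here, since this excerpt does not state how the objectives are combined; the English objective keys and the function `scalarize_reward` are hypothetical names introduced for illustration.

```python
# Average weights copied from Table 1; the English keys are translations.
AVERAGE_WEIGHTS = {
    "time": -0.013359,
    "sonar": -0.002343,
    "detection": 0.301260,
    "boundary": 0.020247,
    "obstacle": 0.307023,
    "speed": 0.194401,
    "steering": -0.007376,
}


def scalarize_reward(components, weights=AVERAGE_WEIGHTS):
    """Weighted sum of per-objective reward terms (assumed linear scalarisation)."""
    missing = set(weights) - set(components)
    if missing:
        raise KeyError(f"missing reward components: {sorted(missing)}")
    return sum(weights[name] * components[name] for name in weights)


if __name__ == "__main__":
    # Unit reward on every objective returns the sum of the weights.
    unit = {name: 1.0 for name in AVERAGE_WEIGHTS}
    print(round(scalarize_reward(unit), 6))
```

With a unit reward on every objective the result equals the sum of the seven weights, about 0.80, so the averages in Table 1 do not sum to one.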

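Table 2. Test results in rectangular obstacle scenarios

| Method | Obstacles known | Coverage/% ↑ | Collision rate/% ↓ | Trajectory length/km ↓ | Energy consumption rate/% ↓ | Information latency/h ↓ | Utility ↑ | Mission completion rate/% ↑ | Mission completion time/h ↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| AdaptiveW-MO-PPO | Yes | 96.66 | 0 | 65.11 | 70.23 | 1.73 | 0.71 | 100 | 4.20 |
| AdaptiveW-MO-PPO | No | 97.15 | 0 | 62.42 | 67.4 | 1.69 | 0.72 | 100 | 4.02 |
| AveragedW-MO-PPO | Yes | 94.30 | 10 | 67.12 | 71.45 | 1.72 | 0.62 | 85 | 4.32 |
| AveragedW-MO-PPO | No | 94.74 | 10 | 67.37 | 71.89 | 1.75 | 0.61 | 85 | 4.38 |
| EqualW-MO-PPO | Yes | 55.18 | 45 | — | — | — | 0.15 | 0 | — |
| EqualW-MO-PPO | No | 40.86 | 65 | — | — | — | 0.09 | 0 | — |
| AveragedW-SO-PPO | Yes | 79.55 | 10 | 78.36 | 93.46 | 2.38 | 0.44 | 15 | 6.40 |
| AveragedW-SO-PPO | No | 76.66 | 15 | 75.30 | 89.37 | 2.41 | 0.43 | 30 | 6.06 |
| PPO | Yes | 82.30 | 0 | 69.61 | 80.01 | 2.00 | 0.50 | 40 | 5.11 |
| PPO | No | 85.42 | 5 | 73.11 | 84.10 | 2.05 | 0.51 | 45 | 5.20 |
| A* | Yes | 93.20 | 0 | 90.61 | 79.99 | 2.12 | 0.65 | 95 | 5.00 |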
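The abstract reports percentage gains, and the arithmetic behind such figures is a relative change against a baseline. The sketch below applies it to two rows of Table 2 (AdaptiveW-MO-PPO and A*, both with known obstacles). This excerpt does not say which baseline rows or which averaging produce the abstract's percentages, so the output is not expected to reproduce them; the helper name `relative_change_percent` is hypothetical.

```python
def relative_change_percent(value, baseline):
    """Signed relative change of value with respect to baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (value - baseline) / baseline * 100.0


# Rows copied from Table 2 (rectangular obstacles, obstacles known).
adaptive = {"coverage": 96.66, "trajectory_km": 65.11, "energy_rate": 70.23}
a_star = {"coverage": 93.20, "trajectory_km": 90.61, "energy_rate": 79.99}

changes = {
    metric: relative_change_percent(adaptive[metric], a_star[metric])
    for metric in adaptive
}

if __name__ == "__main__":
    for metric, change in changes.items():
        print(f"{metric}: {change:+.2f}%")
```

On these two rows the trajectory length comes out roughly 28% shorter and coverage roughly 3.7% higher than A*, which differs from the abstract's headline numbers and suggests those were computed over a different comparison set.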

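Table 3. Test results in irregular obstacle scenarios

| Method | Obstacles known | Coverage/% ↑ | Collision rate/% ↓ | Trajectory length/km ↓ | Energy consumption rate/% ↓ | Information latency/h ↓ | Utility ↑ | Mission completion rate/% ↑ | Mission completion time/h ↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| AdaptiveW-MO-PPO | Yes | 96.69 | 0 | 65.92 | 71.62 | 1.65 | 0.71 | 100 | 4.29 |
| AdaptiveW-MO-PPO | No | 96.84 | 0 | 68.47 | 73.70 | 1.81 | 0.71 | 100 | 4.46 |
| AveragedW-MO-PPO | Yes | 94.49 | 0 | 69.65 | 74.43 | 1.83 | 0.67 | 90 | 4.53 |
| AveragedW-MO-PPO | No | 95.02 | 0 | 68.73 | 73.27 | 1.79 | 0.68 | 90 | 4.48 |
| EqualW-MO-PPO | Yes | 56.11 | 25 | — | — | — | 0.19 | 0 | — |
| EqualW-MO-PPO | No | 38.17 | 75 | — | — | — | 0.06 | 0 | — |
| AveragedW-SO-PPO | Yes | 73.96 | 0 | 83.21 | 98.16 | 2.45 | 0.43 | 5 | 6.58 |
| AveragedW-SO-PPO | No | 74.17 | 5 | 73.33 | 86.84 | 2.47 | 0.42 | 20 | 5.86 |
| PPO | Yes | 76.88 | 0 | 73.80 | 84.99 | 2.04 | 0.44 | 15 | 5.54 |
| PPO | No | 79.92 | 0 | 73.07 | 83.95 | 2.12 | 0.47 | 15 | 5.77 |
| A* | Yes | 92.09 | 0 | 88.55 | 78.18 | 2.07 | 0.63 | 85 | 4.89 |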

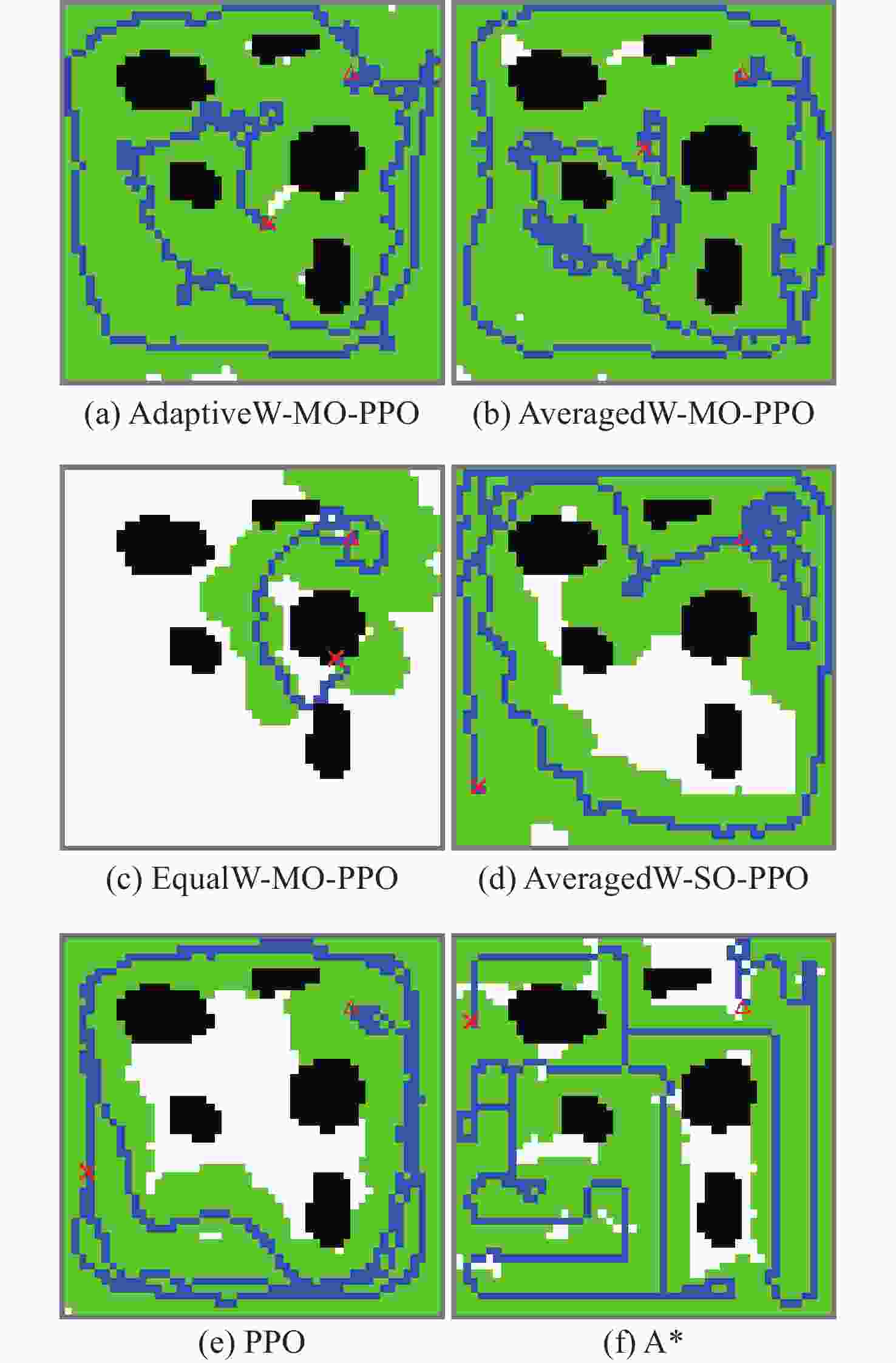

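Table 4. Performance indicators of the example

| Method | Coverage/% ↑ | Collision | Trajectory length/km ↓ | Energy consumption rate/% ↓ | Information latency/h ↓ | Utility ↑ | Mission completion time/h ↓ |
| --- | --- | --- | --- | --- | --- | --- | --- |
| AdaptiveW-MO-PPO | 99.06 | No | 76.69 | 71.16 | 1.43 | 0.79 | 4.28 |
| AveragedW-MO-PPO | 98.83 | No | 94.87 | 83.49 | 2.24 | 0.75 | 5.14 |
| EqualW-MO-PPO | 15.38 | Yes | — | — | — | 0 | — |
| AveragedW-SO-PPO | 80.62 | No | 84.36 | — | — | 0.46 | — |
| PPO | 76.62 | No | 87.40 | — | — | 0.41 | — |
| A* | 86.38 | No | 79.69 | — | — | 0.58 | — |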

