Three-Dimensional Path Planning of AUVs in Dynamic Obstacle Environments


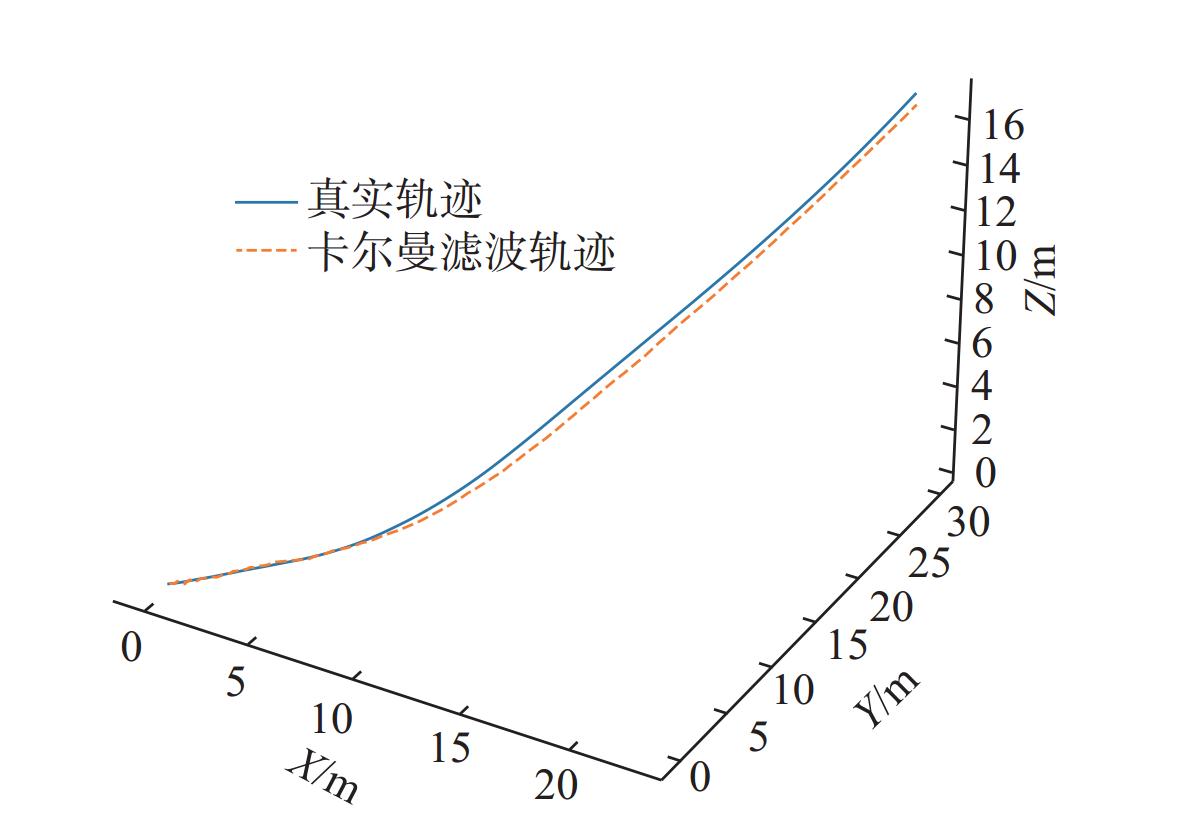

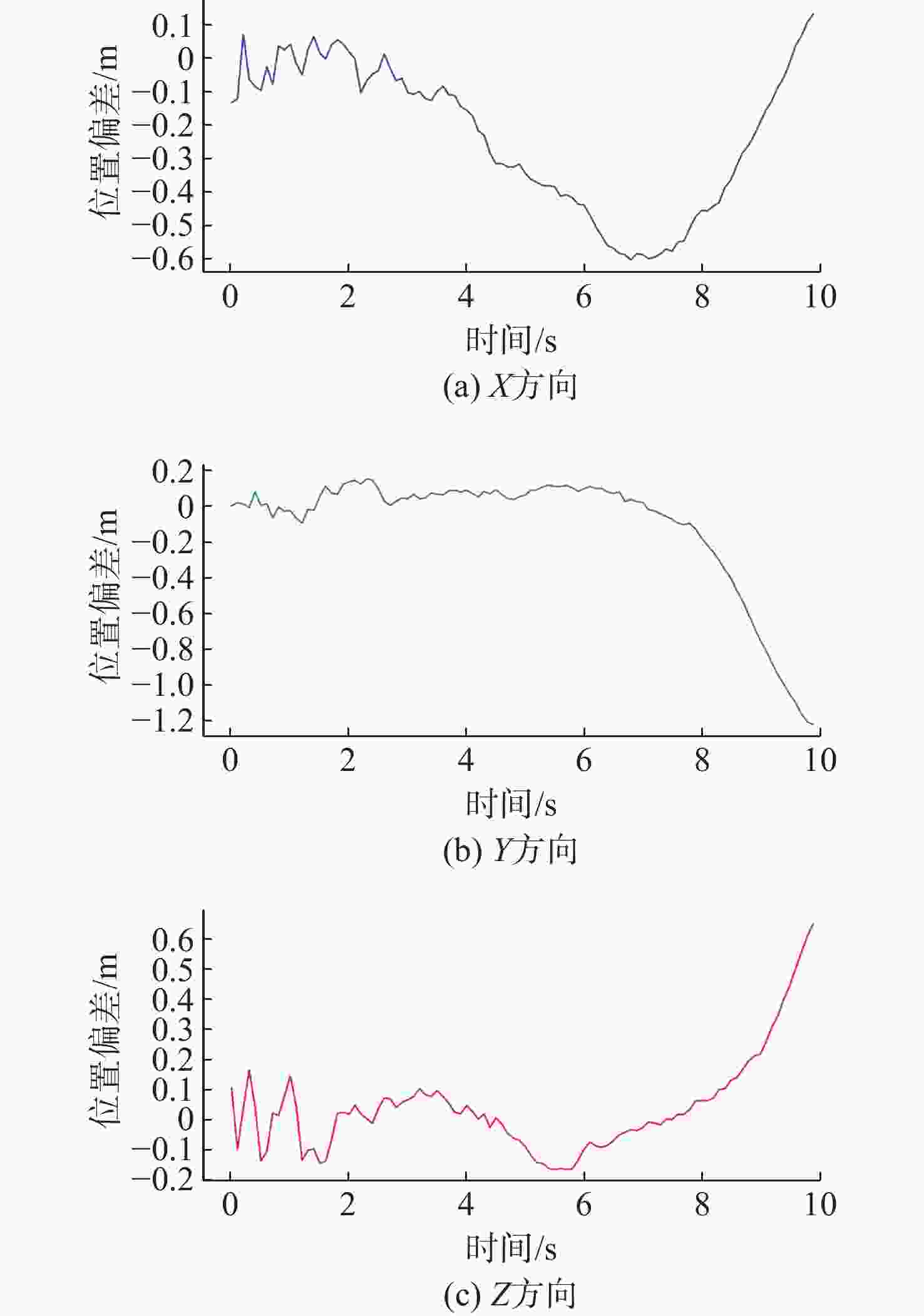

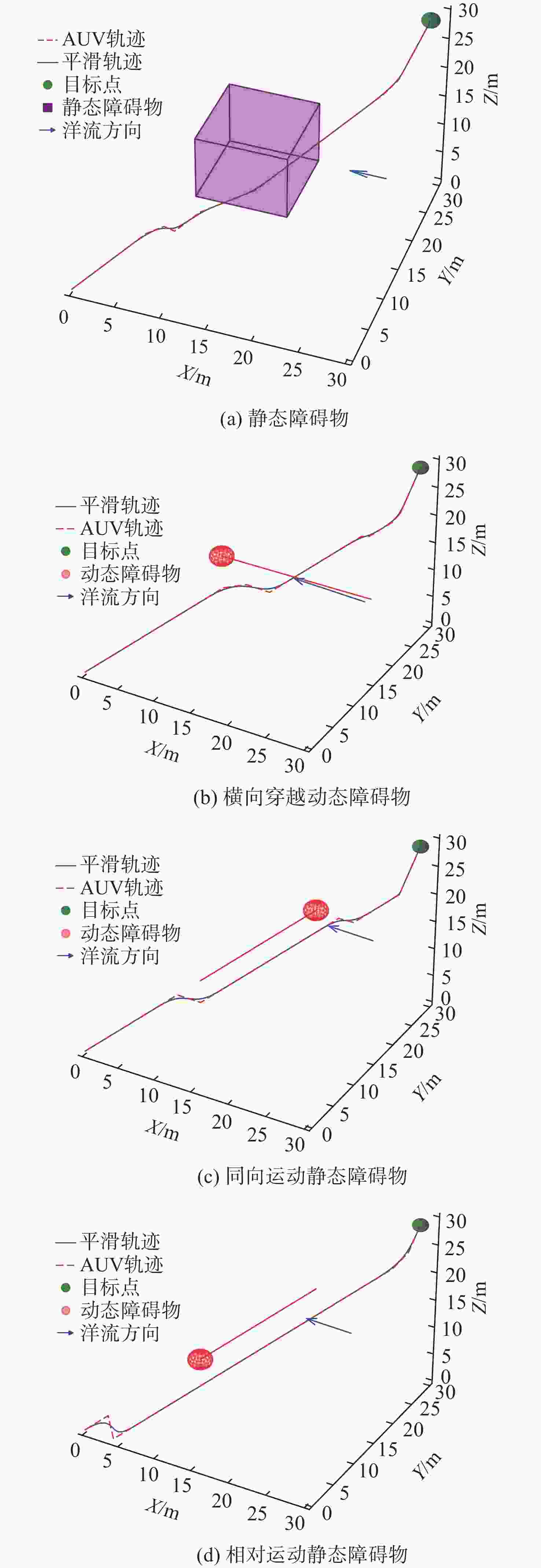

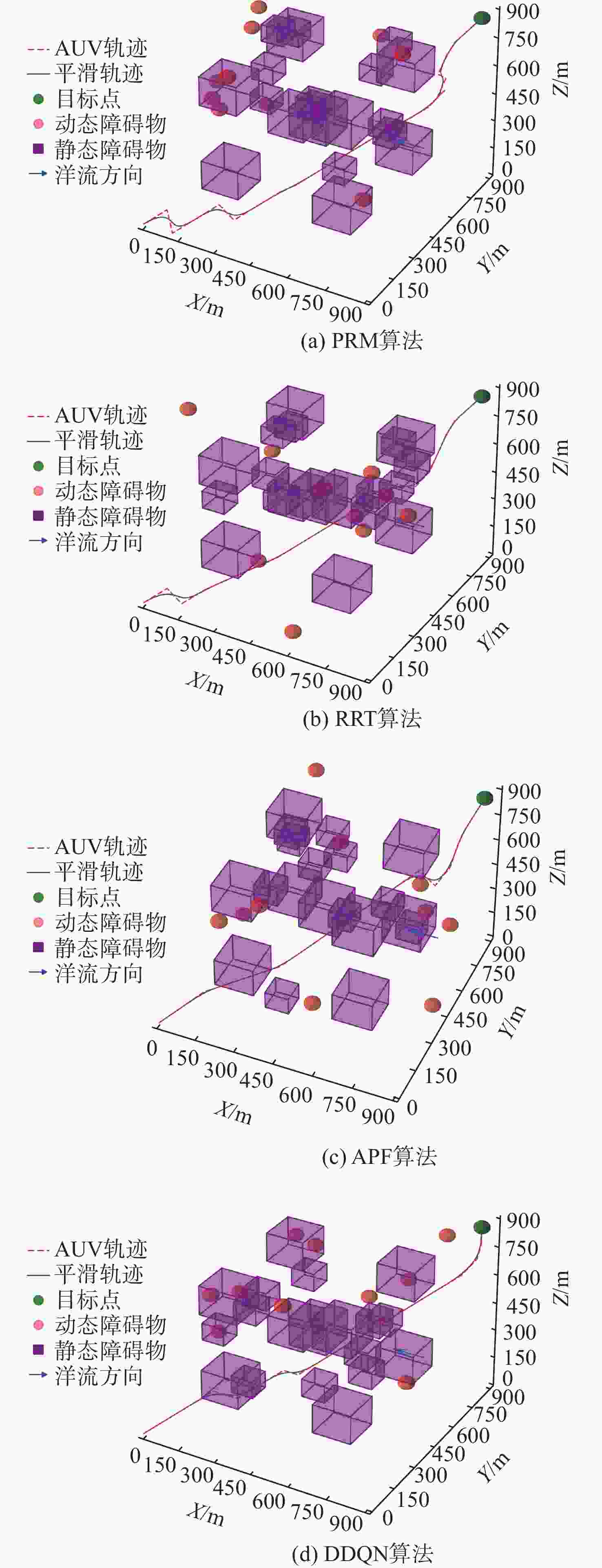

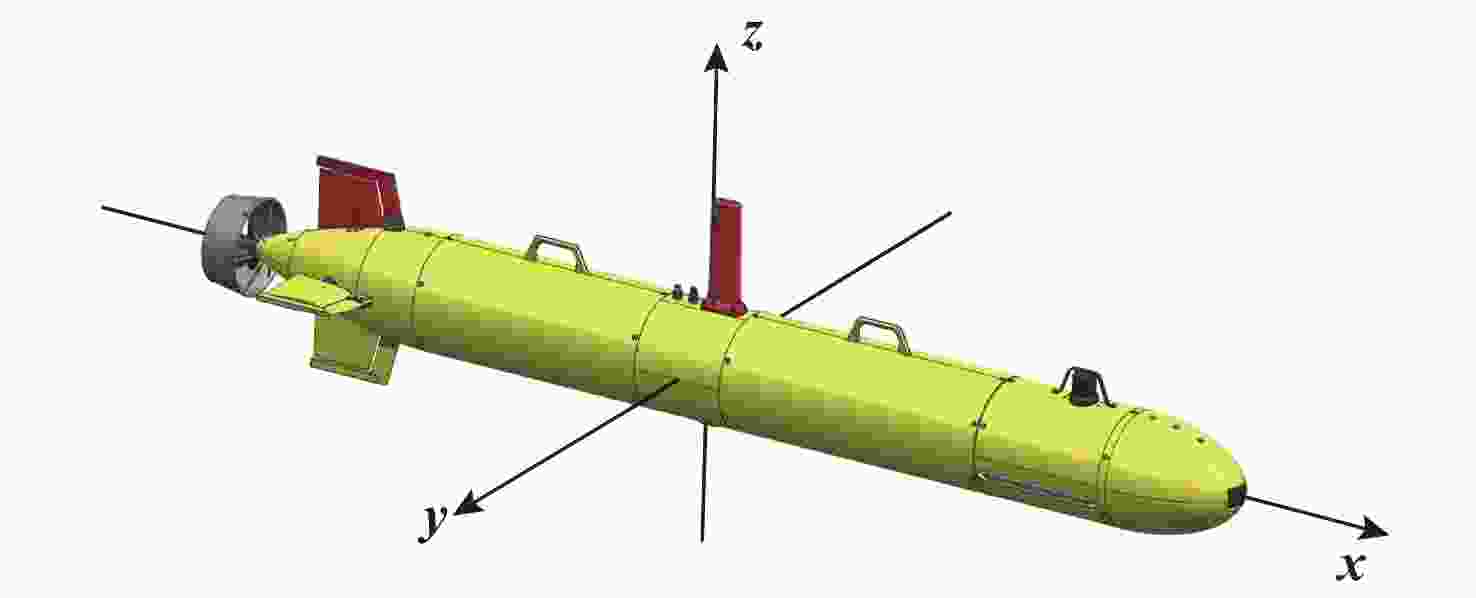

Abstract: Traditional path planning algorithms suffer from a heavy computational burden and insufficient accuracy in the high-dimensional spaces and dynamic obstacle environments faced by autonomous underwater vehicles (AUVs). To address the challenges that dynamic obstacles pose to AUV path planning in complex three-dimensional environments, this study proposes a three-dimensional AUV path planning method based on an improved double deep Q-network (DDQN). Optimizing the network architecture and designing an efficient reward function significantly improve the efficiency and accuracy of AUV path planning. In addition, the trajectories of dynamic obstacles are modeled: the Singer model, combined with a Kalman filter, is used to predict obstacle states accurately, which enhances the AUV's dynamic obstacle avoidance capability. B-spline (basis spline) functions are then used to smooth the planned path, improving the continuity and stability of the AUV's trajectory. Simulation and experimental results show that the proposed method effectively avoids collisions in complex dynamic environments and plans safe, efficient paths in real time. Compared with traditional methods, the DDQN algorithm shows clear advantages in path length, obstacle avoidance success rate, and computational efficiency, providing an effective solution to three-dimensional AUV path planning in dynamic obstacle environments.
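
The abstract does not state the spline degree, the knot vector, or whether the smoothed curve passes through the planner's waypoints. As an illustration only, the Python sketch below assumes a uniform cubic B-spline that treats the planned waypoints as control points, with each endpoint repeated so the smoothed path still starts at the AUV start position and ends at the goal. The names `smooth_path_bspline`, `cubic_bspline_point`, and `samples_per_segment` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def cubic_bspline_point(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate one uniform cubic B-spline segment at t in [0, 1]."""
    # Uniform cubic B-spline basis functions; they are non-negative and sum to 1.
    b0 = (1.0 - t) ** 3 / 6.0
    b1 = (3.0 * t**3 - 6.0 * t**2 + 4.0) / 6.0
    b2 = (-3.0 * t**3 + 3.0 * t**2 + 3.0 * t + 1.0) / 6.0
    b3 = t**3 / 6.0
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d for a, b, c, d in zip(p0, p1, p2, p3))


def smooth_path_bspline(waypoints: Sequence[Point], samples_per_segment: int = 10) -> List[Point]:
    """Approximate a 3D waypoint list with a uniform cubic B-spline (hypothetical helper)."""
    if not waypoints:
        return []
    # Triple the endpoints so the curve starts exactly at the first waypoint
    # and ends exactly at the last one.
    pts = [waypoints[0]] * 2 + list(waypoints) + [waypoints[-1]] * 2
    smooth: List[Point] = []
    for i in range(len(pts) - 3):
        for k in range(samples_per_segment):
            t = k / samples_per_segment
            smooth.append(cubic_bspline_point(pts[i], pts[i + 1], pts[i + 2], pts[i + 3], t))
    smooth.append(cubic_bspline_point(*pts[-4:], 1.0))  # close the final segment at t = 1
    return smooth


if __name__ == "__main__":
    grid_path = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 10.0, 5.0), (30.0, 30.0, 30.0)]
    for p in smooth_path_bspline(grid_path, samples_per_segment=5):
        print(tuple(round(c, 2) for c in p))
```

Because an approximating B-spline does not pass through interior control points, the smoothed curve can cut corners near obstacles, so re-checking clearance after smoothing is a sensible safeguard; the source does not say whether the paper does this.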


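Table 1. Test parameters of obstacle avoidance

| Test No. | Obstacle type | Obstacle position/motion direction | AUV start position | AUV goal position | AUV initial heading angle/(°) |
|---|---|---|---|---|---|
| 1 | Static | (15, 15, 15) | (0, 0, 0) | (30, 30, 30) | 45 |
| 2 | Dynamic | Crossing laterally | (0, 0, 0) | (30, 30, 30) | 45 |
| 3 | Dynamic | Moving in the same direction | (0, 0, 0) | (30, 30, 30) | 45 |
| 4 | Dynamic | Head-on (moving toward the AUV) | (0, 0, 0) | (30, 30, 30) | 45 |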
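
Tests 2 to 4 in Table 1 involve moving obstacles, whose states the abstract says are predicted with the Singer model and a Kalman filter. The filter settings are not given in this section, so the Python sketch below rests on assumptions: independent x, y, z axes; a per-axis state of position, velocity, and acceleration; position-only measurements; and illustrative values for the sampling interval `dt`, the maneuver rate `alpha` (inverse time constant), the acceleration standard deviation `sigma_m`, and the measurement noise `meas_std`. The class and function names are hypothetical, and the process noise is integrated numerically rather than written out in Singer's closed form.

```python
import numpy as np


def singer_transition(alpha: float, dt: float) -> np.ndarray:
    """Per-axis Singer transition matrix for the state [position, velocity, acceleration]."""
    e = np.exp(-alpha * dt)
    return np.array([
        [1.0, dt, (alpha * dt - 1.0 + e) / alpha**2],
        [0.0, 1.0, (1.0 - e) / alpha],
        [0.0, 0.0, e],
    ])


def singer_process_noise(alpha: float, sigma_m: float, dt: float, steps: int = 200) -> np.ndarray:
    """Discrete process noise Q = q * integral over [0, dt] of g(tau) g(tau)^T.

    g(tau) is the acceleration column of the transition matrix, and
    q = 2 * alpha * sigma_m**2 is the intensity of the white noise driving the
    acceleration. Evaluated with the trapezoidal rule.
    """
    taus = np.linspace(0.0, dt, steps + 1)
    g = np.stack([singer_transition(alpha, t)[:, 2] for t in taus])  # shape (steps + 1, 3)
    outer = g[:, :, None] * g[:, None, :]  # g g^T at each tau
    w = np.full(steps + 1, dt / steps)
    w[[0, -1]] *= 0.5  # trapezoidal end weights
    return 2.0 * alpha * sigma_m**2 * np.einsum("k,kij->ij", w, outer)


class SingerKalmanFilter3D:
    """Kalman filter with a Singer acceleration model on each axis (axes assumed independent)."""

    def __init__(self, first_pos, dt=1.0, alpha=0.1, sigma_m=0.5, meas_std=0.1):
        eye3 = np.eye(3)
        # Block-diagonal 9x9 model; state order [x, vx, ax, y, vy, ay, z, vz, az].
        self.F = np.kron(eye3, singer_transition(alpha, dt))
        self.Q = np.kron(eye3, singer_process_noise(alpha, sigma_m, dt))
        self.H = np.zeros((3, 9))
        self.H[[0, 1, 2], [0, 3, 6]] = 1.0  # only positions are measured
        self.R = meas_std**2 * eye3
        self.x = np.zeros(9)
        self.x[[0, 3, 6]] = first_pos
        self.P = 10.0 * np.eye(9)  # broad initial uncertainty (assumed)

    def predict(self) -> None:
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q

    def update(self, z) -> None:
        y = np.asarray(z, dtype=float) - self.H @ self.x  # innovation
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(9) - K @ self.H) @ self.P

    def forecast(self, n_steps: int) -> np.ndarray:
        """Propagate the estimate n_steps ahead without measurements; returns predicted positions."""
        x = self.x.copy()
        out = []
        for _ in range(n_steps):
            x = self.F @ x
            out.append(x[[0, 3, 6]].copy())
        return np.array(out)


if __name__ == "__main__":
    # Hypothetical crossing obstacle (cf. test 2 in Table 1): moves along +y at 1 m/s
    # through x = z = 15 m and is observed once per second without noise.
    kf = SingerKalmanFilter3D(first_pos=(15.0, 0.0, 15.0))
    for k in range(1, 30):
        kf.predict()
        kf.update((15.0, float(k), 15.0))
    print("predicted positions over the next 5 s:\n", kf.forecast(5).round(2))
```

In a planner, `forecast` could supply predicted obstacle positions over the next few decision steps so that actions approaching them are penalized or rejected; how the paper feeds the predictions into the DDQN is not described in this section.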

Table 2. Network hyperparameters of DDQN

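| Parameter | Symbol | Value |
|---|---|---|
| Experience replay buffer capacity | $n_m$ | 5000 |
| Discount factor | $\lambda$ | 0.98 |
| Exploration rate (initial) | $\varepsilon$ | 1.0 |
| Minimum exploration rate | ${\varepsilon _{\min }}$ | 0.05 |
| Exploration rate decay | ${\varepsilon _{\mathrm{decay}}}$ | 0.99 |
| Step penalty factor | $k_1$ | 0.6 |
| Ocean current scaling factor | $k_2$ | 0.4 |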
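
Table 2 fixes the replay buffer capacity (5000), the discount factor λ = 0.98, and an ε-greedy schedule that starts at 1.0, decays by a factor of 0.99, and stops at 0.05. The network architecture and the state and action encoding are not given in this section, so the Python sketch below shows only where these hyperparameters enter a standard DDQN update: the double-DQN target, in which the online network selects the next action and the target network evaluates it, plus ε-greedy action selection and a fixed-size replay buffer. Q-values are plain lists to stay framework-neutral; decaying ε once per episode is an assumption, and all function names are hypothetical. The step penalty factor $k_1$ and current scaling factor $k_2$ belong to the paper's reward function, whose form is not given here, so they are not modeled.

```python
import random
from collections import deque
from typing import Sequence

# Values from Table 2; applying the decay once per episode is an assumption.
REPLAY_CAPACITY = 5000
DISCOUNT = 0.98  # lambda in Table 2
EPS_START, EPS_MIN, EPS_DECAY = 1.0, 0.05, 0.99


def epsilon_at(episode: int) -> float:
    """Multiplicative exploration decay, clipped at the minimum exploration rate."""
    return max(EPS_MIN, EPS_START * EPS_DECAY**episode)


def select_action(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Epsilon-greedy choice over a discrete action set."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


def double_dqn_target(reward: float, done: bool,
                      q_online_next: Sequence[float],
                      q_target_next: Sequence[float],
                      discount: float = DISCOUNT) -> float:
    """y = r + lambda * Q_target(s', argmax_a Q_online(s', a)), and y = r at terminal states."""
    if done:
        return reward
    best_a = max(range(len(q_online_next)), key=lambda a: q_online_next[a])  # online net selects
    return reward + discount * q_target_next[best_a]  # target net evaluates


# Fixed-size experience replay: the oldest transitions are dropped once 5000 are stored.
replay_buffer: deque = deque(maxlen=REPLAY_CAPACITY)
```

For example, with online next-state values `[0.1, 0.9, 0.3]` and target values `[5.0, 2.0, 7.0]`, the double-DQN target uses action 1 and evaluates to about 1.0 + 0.98 × 2.0 = 2.96 for a reward of 1.0, whereas a plain DQN target would take the target network's own maximum, 7.0. Decoupling selection from evaluation in this way is what reduces the overestimation bias of Q-learning.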

Table 3. Comparison of experimental results for different algorithms

| Algorithm | Shortest path/m | Average path/m | Travel time/s |
|---|---|---|---|
| PRM | 1945.82 | 2104.50 | 4195.67 |
| RRT | 2298.57 | 2421.77 | 4623.94 |
| APF | 1899.48 | 1959.98 | 3941.80 |
| DDQN | 1789.98 | 1859.98 | 3545.93 |
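
To make the comparison in Table 3 concrete, the short Python snippet below computes, using only the tabulated values, the percentage by which DDQN's shortest path, average path, and travel time are lower than each baseline's. The dictionary layout and the function name `reduction_vs` are illustrative.

```python
# Values transcribed from Table 3: (shortest path/m, average path/m, travel time/s)
RESULTS = {
    "PRM": (1945.82, 2104.50, 4195.67),
    "RRT": (2298.57, 2421.77, 4623.94),
    "APF": (1899.48, 1959.98, 3941.80),
    "DDQN": (1789.98, 1859.98, 3545.93),
}
METRICS = ("shortest path", "average path", "travel time")


def reduction_vs(baseline: str, method: str = "DDQN") -> dict:
    """Percentage by which `method` is lower than `baseline` on each metric."""
    base, ours = RESULTS[baseline], RESULTS[method]
    return {m: 100.0 * (b - o) / b for m, b, o in zip(METRICS, base, ours)}


if __name__ == "__main__":
    for name in ("PRM", "RRT", "APF"):
        print(name, {m: round(v, 1) for m, v in reduction_vs(name).items()})
```

Against APF, the strongest baseline on all three metrics, DDQN's average path is about 5.1% shorter and its travel time about 10.0% shorter; against RRT, its average path is about 23.2% shorter.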

