Underwater Visual Object Tracking Method Based on Scene Perception


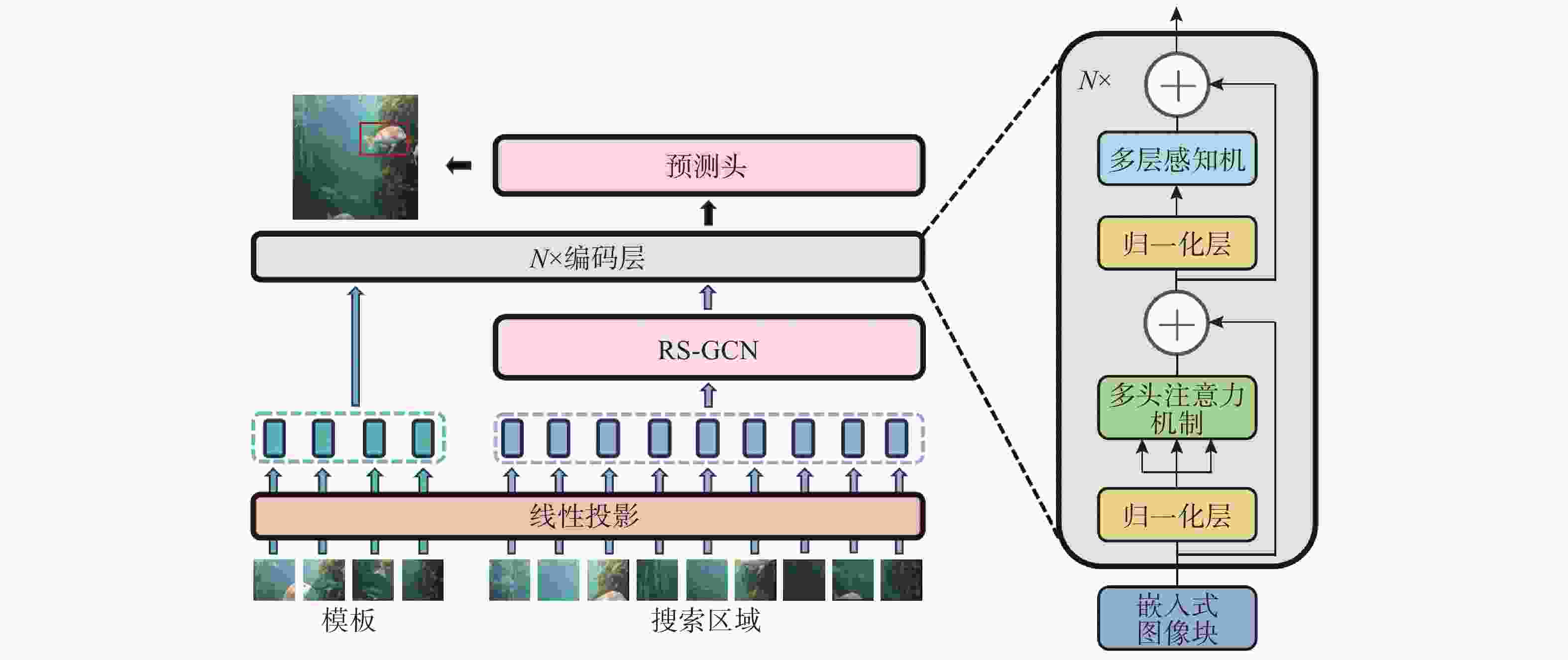

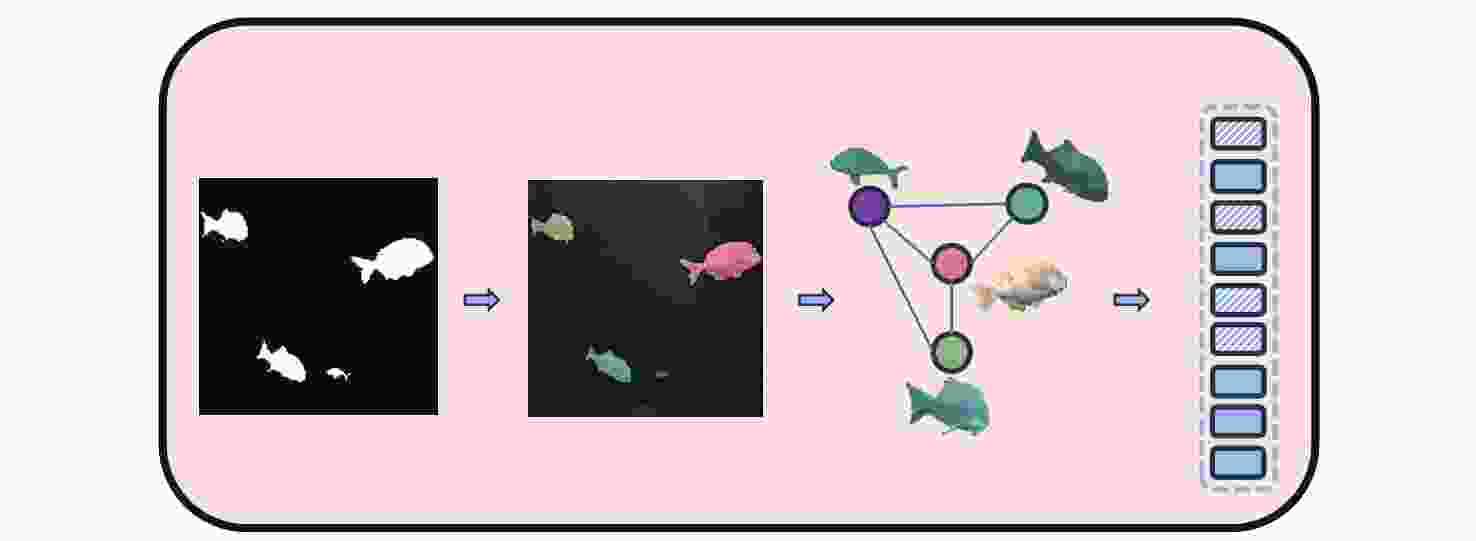

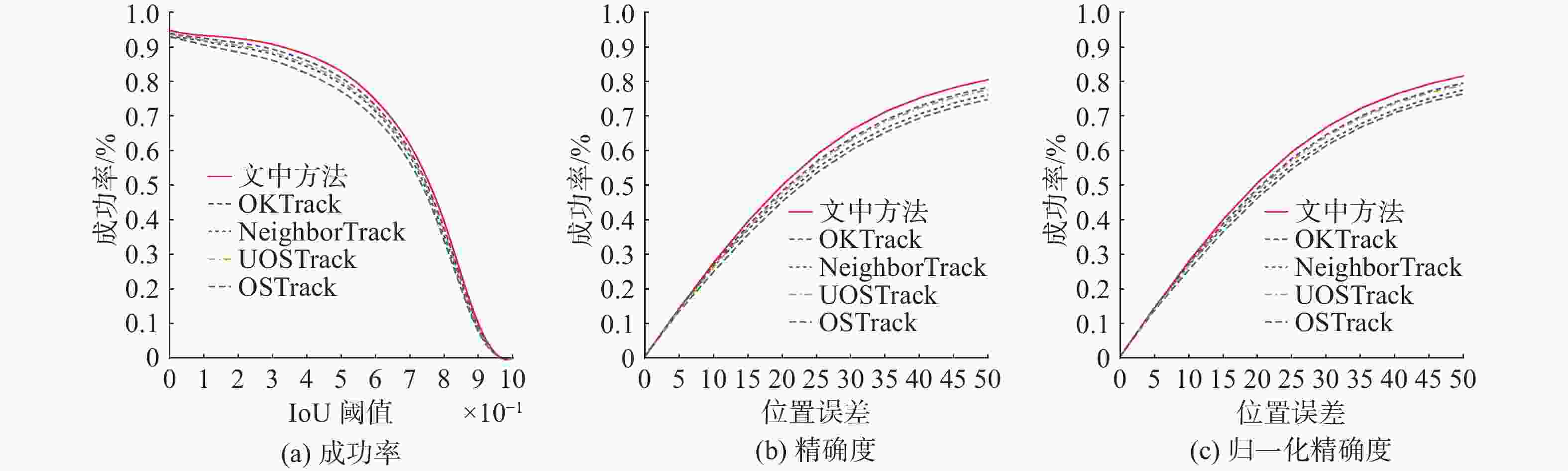

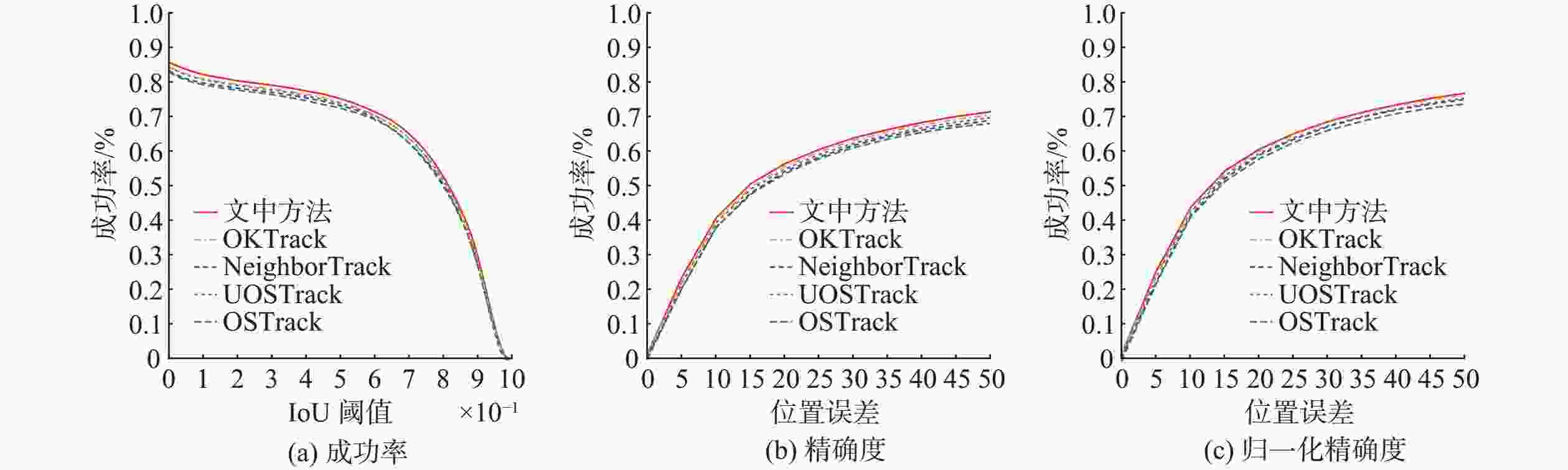

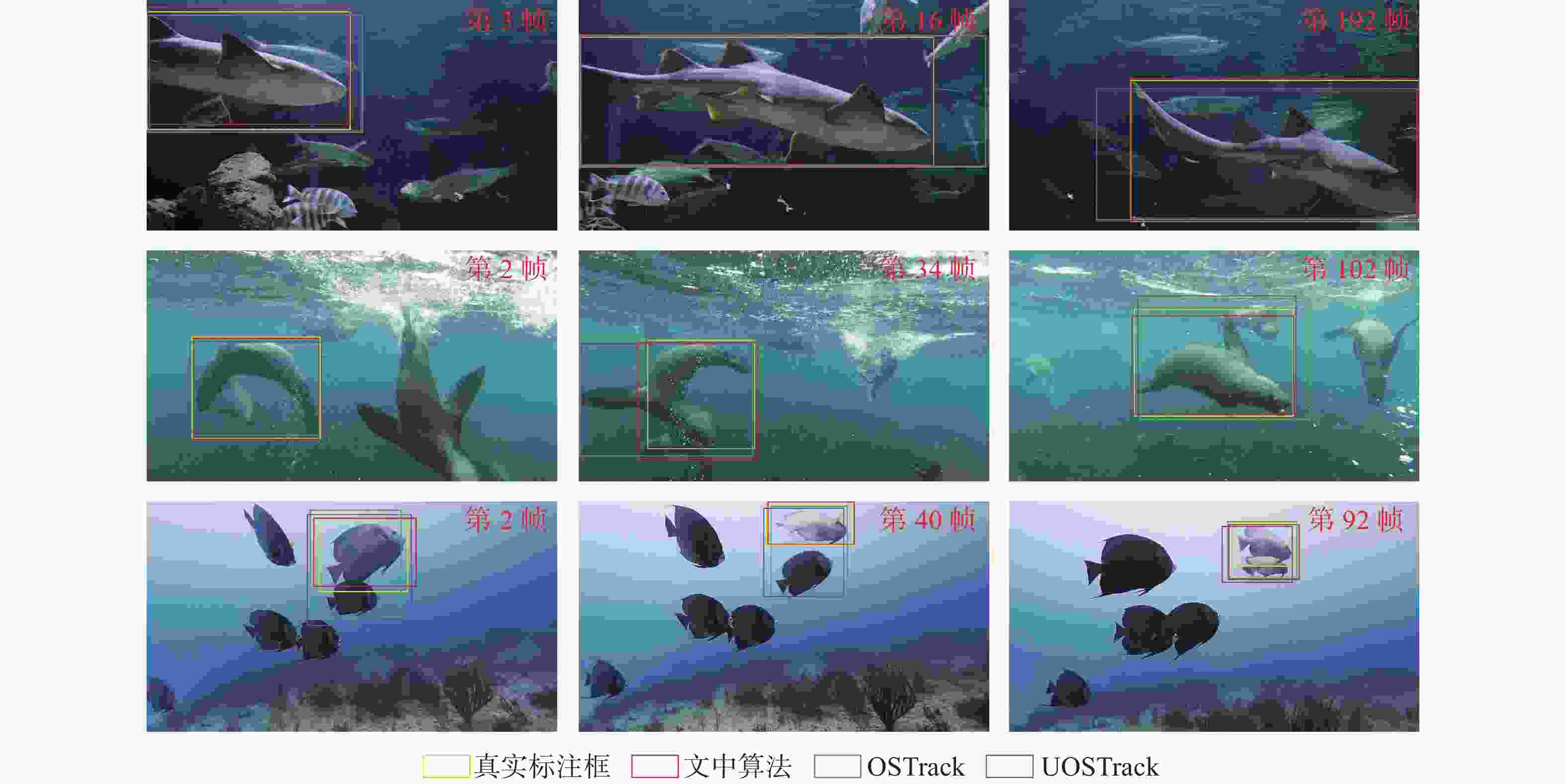

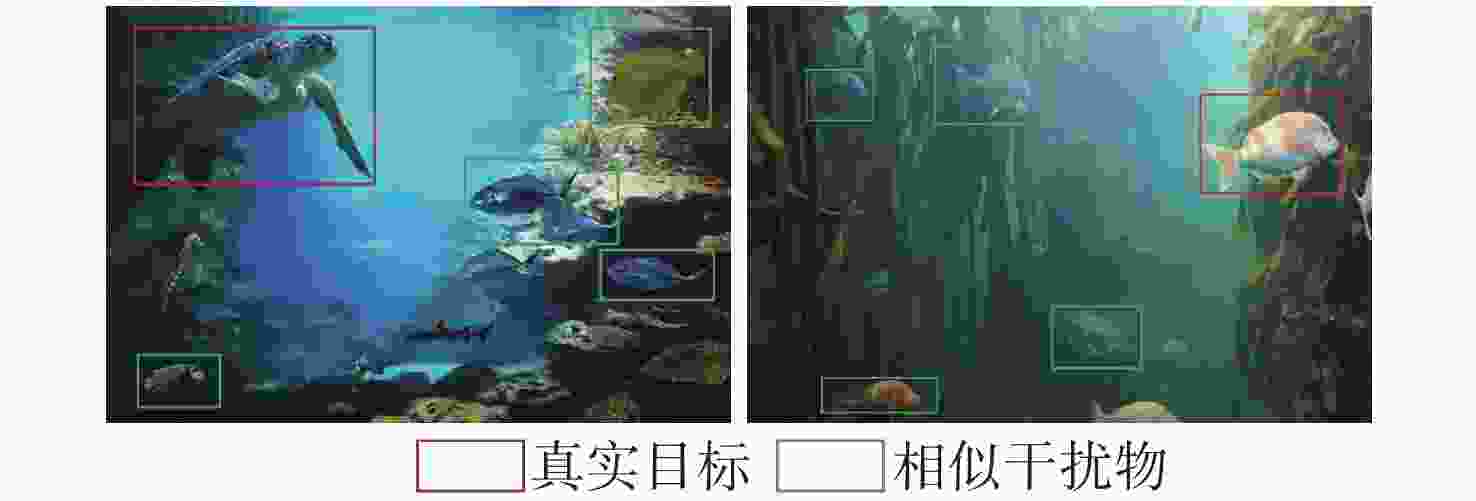


Keywords: underwater vision; object tracking; scene perception; graph convolutional network; dual-view graph contrastive learning

Abstract: Underwater visual object tracking is a core technology for scene understanding in autonomous underwater vehicles (AUVs). However, uneven illumination, background interference, and target appearance variation in complex underwater environments severely degrade the accuracy and stability of traditional visual tracking methods. Existing approaches rely mainly on modeling the target's appearance, which makes them unreliable in complex environments: when similar objects are present, they tend to misidentify the target and drift. This paper proposes an underwater single-object tracking method based on scene perception. A region-segmentation-based graph convolution module extracts all object regions in the scene, and a graph convolutional network models the long-range dependencies between the target region and the surrounding key regions, which substantially improves discrimination against similar objects. In addition, a dual-view graph contrastive learning strategy generates randomly perturbed views of the target features, enabling unsupervised online updates of the graph convolution module and keeping the model adaptive and stable in complex environments. Experiments on the UOT100 and UTB180 datasets show that the proposed method outperforms classical trackers in tracking accuracy and robustness, with clear gains in success rate and precision, especially in scenes with large illumination changes, cluttered backgrounds, and strong interference from similar objects. These results indicate that the method effectively mitigates the target drift caused by illumination variation and background interference and maintains stable tracking in the presence of similar objects, providing an efficient and reliable tracking solution for underwater unmanned systems.
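The abstract names three steps: segment the scene into regions, relate the target region to the other regions with a graph convolutional network, and update that network online with a dual-view graph contrastive loss. The paper's exact design is not given here, so the NumPy sketch below is illustrative only and rests on our own assumptions: region features come from plain k-means over patch tokens, each region is linked to its k most cosine-similar regions, the GCN is a single Kipf and Welling layer, the two views are produced by GRACE-style edge dropping and feature masking, and the objective is a simplified node-level InfoNCE loss. All function names, sizes, and hyperparameters are hypothetical, and the gradient step of the actual online update is omitted.

```python
import numpy as np

# Hypothetical sketch: names, sizes, and hyperparameters are illustrative
# assumptions, not the paper's implementation.

def kmeans_regions(patches, n_regions, rng, iters=10):
    """Assumed stand-in for region segmentation: plain k-means over patch features.
    Returns one mean feature per region and the region label of every patch."""
    centers = patches[rng.choice(len(patches), n_regions, replace=False)].copy()
    for _ in range(iters):
        dists = ((patches[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for c in range(n_regions):
            members = patches[labels == c]
            if len(members):  # keep the old center if a cluster empties
                centers[c] = members.mean(axis=0)
    return centers, labels

def knn_adjacency(feats, k):
    """Link each region to its k most cosine-similar regions, then symmetrize."""
    normed = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    sim = normed @ normed.T
    np.fill_diagonal(sim, -np.inf)  # a region is never its own neighbor
    adj = np.zeros_like(sim)
    nearest = np.argsort(-sim, axis=1)[:, :k]
    np.put_along_axis(adj, nearest, 1.0, axis=1)
    return np.maximum(adj, adj.T)

def gcn_layer(adj, feats, weight):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adj + np.eye(len(adj))
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ feats @ weight, 0.0)

def perturb_view(adj, feats, rng, p_edge=0.2, p_feat=0.2):
    """Random view: drop undirected edges and mask whole feature dimensions."""
    keep = np.triu(rng.random(adj.shape) >= p_edge, 1)
    keep = keep | keep.T
    feat_mask = rng.random(feats.shape[1]) >= p_feat
    return adj * keep, feats * feat_mask

def info_nce(z1, z2, tau=0.5):
    """Node i in view 1 and node i in view 2 form the positive pair; the other
    nodes of view 2 act as negatives (a simplified cross-view InfoNCE)."""
    z1 = z1 / (np.linalg.norm(z1, axis=1, keepdims=True) + 1e-8)
    z2 = z2 / (np.linalg.norm(z2, axis=1, keepdims=True) + 1e-8)
    logits = z1 @ z2.T / tau
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))

rng = np.random.default_rng(0)
patches = rng.normal(size=(256, 32))  # e.g. 16x16 patch tokens, 32-dim (illustrative)
regions, labels = kmeans_regions(patches, n_regions=8, rng=rng)
adj = knn_adjacency(regions, k=3)
weight = rng.normal(scale=0.1, size=(32, 16))
(a1, x1), (a2, x2) = perturb_view(adj, regions, rng), perturb_view(adj, regions, rng)
loss = info_nce(gcn_layer(a1, x1, weight), gcn_layer(a2, x2, weight))
print(regions.shape, adj.shape, round(float(loss), 4))
```

The loss compares only the embeddings of the same regions under two random perturbations, so it needs no ground-truth labels; this is what allows the update to run unsupervised during tracking. In a real system the gradient of `loss` with respect to `weight` would be obtained with an autodiff framework.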


Table 1. Comparison of evaluation metrics for different object tracking algorithms on the UOT100 and UTB180 datasets

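| Tracker | Source | Backbone | UOT100 Success/% | UOT100 Precision/% | UOT100 Normalized precision/% | UTB180 Success/% | UTB180 Precision/% | UTB180 Normalized precision/% |
|---|---|---|---|---|---|---|---|---|
| OSTrack | ECCV 2022 | ViT-B | 64.31 | 58.30 | 80.45 | 61.68 | 56.67 | 70.78 |
| NeighborTrack | CVPR 2023 | ViT-B | 65.84 | 60.39 | 82.70 | 63.46 | 57.74 | 73.48 |
| UOSTrack | Ocean Engineering 2023 | ViT-B | 67.62 | 62.47 | 84.98 | 64.97 | 58.89 | 74.94 |
| OKTrack | NeurIPS 2024 | ViT-B | 68.73 | 65.13 | 86.71 | 66.85 | 59.13 | 77.23 |
| Proposed method | - | ViT-B | 70.28 | 65.49 | 87.83 | 67.53 | 60.39 | 78.96 |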
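Table 1 reports success rate, precision, and normalized precision without defining them on this page. The sketch below assumes the common one-pass evaluation conventions: success rate as the area under the IoU success curve sampled at 21 thresholds in [0, 1], precision as the fraction of frames with a center error of at most 20 pixels, and LaSOT-style normalized precision averaged over 51 thresholds in [0, 0.5]. The toolkit actually used for UOT100 and UTB180 may differ in such details. Boxes are (x, y, w, h), and all function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers; boxes are (x, y, w, h) arrays of shape (num_frames, 4).

def iou_xywh(pred, gt):
    """Per-frame intersection over union of predicted and ground-truth boxes."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union

def box_centers(boxes):
    """Center point (cx, cy) of each box."""
    return boxes[:, :2] + boxes[:, 2:] / 2.0

def success_rate(pred, gt):
    """Area under the success curve: fraction of frames with IoU > t, averaged over 21 t in [0, 1]."""
    ious = iou_xywh(pred, gt)
    return np.mean([(ious > t).mean() for t in np.linspace(0.0, 1.0, 21)])

def precision(pred, gt, max_pixels=20.0):
    """Fraction of frames whose center location error is at most max_pixels."""
    err = np.linalg.norm(box_centers(pred) - box_centers(gt), axis=1)
    return (err <= max_pixels).mean()

def normalized_precision(pred, gt):
    """Center error divided by ground-truth width/height, averaged over 51 thresholds in [0, 0.5]."""
    err = np.linalg.norm((box_centers(pred) - box_centers(gt)) / gt[:, 2:], axis=1)
    return np.mean([(err <= t).mean() for t in np.linspace(0.0, 0.5, 51)])

gt = np.array([[0, 0, 10, 10], [0, 0, 10, 10]], dtype=float)
pred = np.array([[0, 0, 10, 10], [5, 0, 10, 10]], dtype=float)  # second frame shifted 5 px
print(success_rate(pred, gt), precision(pred, gt), normalized_precision(pred, gt))
```

Because the success curve here uses a strict IoU > t comparison, even a perfect tracker scores 20/21 on it; toolkits differ on this detail, which is one reason scores are only comparable within a single evaluation protocol.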

Table 2. Real-time performance comparison of different algorithms on the UTB180 dataset

| Tracker | Params/M | FLOPs/G | FPS/(frames/s) |
|---|---|---|---|
| OSTrack | 83.1 | 16.7 | 83.1 |
| NeighborTrack | 85.3 | 18.3 | 85.2 |
| UOSTrack | 87.4 | 19.8 | 87.6 |
| OKTrack | 92.1 | 21.5 | 90.5 |
| Proposed method | 84.2 | 17.9 | 91.3 |

Table 3. Ablation study on the UTB180 dataset

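| RS-GCN | Dual-view graph contrastive learning strategy | Success/% | Precision/% | Normalized precision/% |
|---|---|---|---|---|
| − | − | 63.03 | 56.52 | 72.61 |
| − | √ | 65.83 | 57.91 | 75.84 |
| √ | − | 66.19 | 58.26 | 77.43 |
| √ | √ | 67.53 | 60.39 | 78.96 |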
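To make the ablation in Table 3 easier to read, the snippet below recomputes each configuration's gain over the baseline row (neither module enabled) directly from the table values. The dictionary keys are our own labels for the table rows, not names used by the authors.

```python
# UTB180 ablation results copied from Table 3: (success, precision, normalized precision), in %.
ablation = {
    "baseline": (63.03, 56.52, 72.61),
    "+contrastive": (65.83, 57.91, 75.84),
    "+RS-GCN": (66.19, 58.26, 77.43),
    "full": (67.53, 60.39, 78.96),
}

def gains(results, reference="baseline"):
    """Percentage-point difference of every row from the reference row, rounded to 2 decimals."""
    base = results[reference]
    return {
        name: tuple(round(v - b, 2) for v, b in zip(vals, base))
        for name, vals in results.items()
        if name != reference
    }

for name, (d_suc, d_pre, d_norm) in gains(ablation).items():
    print(f"{name:>13}: success {d_suc:+.2f}, precision {d_pre:+.2f}, norm. precision {d_norm:+.2f}")
```

Read this way, RS-GCN alone adds 3.16 points of success and the contrastive strategy alone adds 2.80, while the full model gains 4.50, so the two components' contributions overlap partly rather than adding up.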

