Visual Guidance Algorithm for AUV Recovery Based on CNN Object Tracking


Abstract: Autonomous underwater docking and recovery is the main means of solving the energy-supply and information-transmission problems of autonomous undersea vehicles (AUVs) and of enhancing the underwater detection and concealment capabilities of unmanned systems. This study designs an underwater visual beacon guidance scheme for recovery with funnel-shaped docking stations in a real environment and proposes an improved detect-by-tracking visual guidance algorithm based on a convolutional neural network (CNN). First, the CNN is trained on an underwater docking station dataset to detect the target. Next, an improved tracking algorithm is combined with position and attitude spatial information to achieve robust tracking. Finally, an improved PnP-P3P position and attitude estimation framework addresses the problem of too few observable beacon lights under large offsets, which effectively expands the workspace of underwater visual guidance. The beacon array design and the algorithm are validated through workspace simulation, and corresponding effective workspace indexes are proposed. An optical guidance experiment in a pool and an acousto-optic joint guidance experiment combined with an ultra-short baseline (USBL) system in a real lake environment confirm the engineering feasibility of the improved detect-by-tracking framework.


Table 1. Dataset parameters of the underwater station

| Name | Value |
| --- | --- |
| Image size | 720×576 |
| Images in dataset | 20,515 |
| Images in training set | 16,412 |
| Images in test set | 4,103 |
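The training and test counts in Table 1 correspond to an exact 80/20 split of the 20,515 images. The source does not say how the split was drawn, so the following is only a minimal sketch assuming a seeded random shuffle; `split_dataset`, `train_fraction`, and `seed` are hypothetical names introduced for illustration.

```python
import random


def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle items reproducibly and split them into (train, test).

    Assumption: a seeded random split. The source only reports the
    resulting set sizes, not the splitting procedure.
    """
    items = list(items)
    rng = random.Random(seed)
    rng.shuffle(items)
    n_train = round(len(items) * train_fraction)
    return items[:n_train], items[n_train:]


# Image indices stand in for the actual image files.
train, test = split_dataset(range(20515))
print(len(train), len(test))
```

With 20,515 items and a training fraction of 0.8, the two parts have the sizes listed in Table 1.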

Table 2. Parameters of training

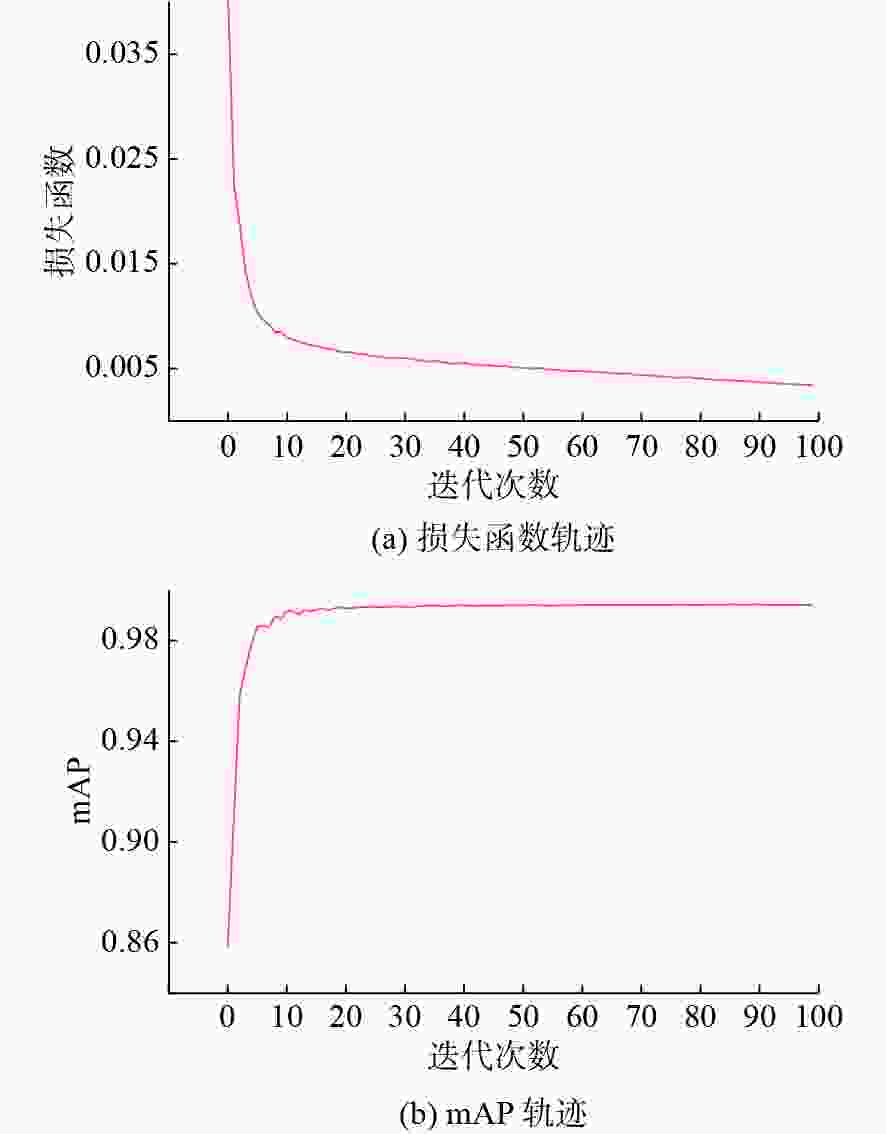

| Name | Value |
| --- | --- |
| Number of iterations | 100 |
| Batch size (images) | 32 |
| Optimizer | SGD |
| Mosaic image augmentation | 0.8 |

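Table 3. Multi-object tracking performance indexes

| Method | Test set | Missed detections | False positives | Mismatches | MOT accuracy (%) |
| --- | --- | --- | --- | --- | --- |
| SORT | Original | 0 | 0 | 0 | 100.00 |
| Reprojection tracking | Original | 0 | 0 | 0 | 100.00 |
| SORT | Occluded | 5 | 0 | 468 | 59.57 |
| Reprojection tracking | Occluded | 0 | 0 | 4 | 99.65 |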
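For readers unfamiliar with the metric in Table 3, MOT accuracy is conventionally defined in the CLEAR MOT metrics as 1 − (misses + false positives + mismatches) / (number of ground-truth objects). The sketch below assumes this standard definition applies here. The table does not report the ground-truth object count, so `num_gt` in the usage line is a hypothetical value and the result is not expected to reproduce the 59.57% entry.

```python
def mot_accuracy(misses, false_positives, mismatches, num_gt):
    """Return MOT accuracy in percent under the standard CLEAR MOT definition.

    num_gt is the total number of ground-truth objects over all frames;
    it is not given in the source table, so callers must supply it.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    errors = misses + false_positives + mismatches
    return 100.0 * (1.0 - errors / num_gt)


# Error counts from the SORT / occluded row; num_gt=1000 is a
# hypothetical ground-truth count chosen only for illustration.
print(round(mot_accuracy(5, 0, 468, num_gt=1000), 2))
```

Because mismatches enter the numerator with the same weight as misses and false positives, the 468 mismatches in the occluded SORT row dominate its score, while the reprojection tracker's 4 mismatches barely move it.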

Table 4. Length observation margins for different numbers of beacons

| Number of beacons | Maximum length observation margin (%) |
| --- | --- |
| 4 | 50.00 |
| 5 | 50.00 |
| 6 | 75.00 |
| 7 | 71.69 |
| 8 | 85.36 |

Table 5. Workspace indexes

| Minimum n-point observation | Kn | A100 | A200 |
| --- | --- | --- | --- |
| 6 | 5178.66 | 414.65 | 267.83 |
| 3 | 6337.75 | 338.32 | 110.67 |

